Code examples

Introduction

Further to my recent post comparing the performance of a WebAssembly module written in Rust to plain JavaScript and WebGL shaders for rendering the Mandelbrot set, I have added an additional example comparing the performance of WebAssembly and plain JavaScript for the Floyd-Steinberg dithering algorithm. Check out the demo!

Floyd-Steinberg dithering algorithm

The Floyd-Steinberg dithering algorithm is used as a way to distribute quantization errors to neighbouring pixels in an image.

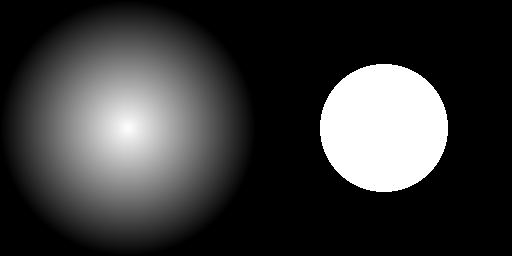

For example, when quantizing a greyscale image with 8 bits per pixel to, for example, 1 bit per pixel, each pixel needs to be converted from the range 0…255 to the range 0…1. The values 0 and 1 could of course represent anything, however to maximise the luminosity range of an image they would normally represent black and white respectively. A simple approach might be to divide the input number by 255 and then round to the nearest whole number. Therefore, an input value of 0 would be 0, 1 would be 1, 128 would be 1, 64 would be 0, etc. We could of course change this “threshold” where the input maps from 0 to 1 to an arbitrary value; this is what is done using the “Threshold” operation in image editing software such as GIMP and Photoshop.

This produces the following effect, which when trying to mimic the original image as closely as possible is not ideal:

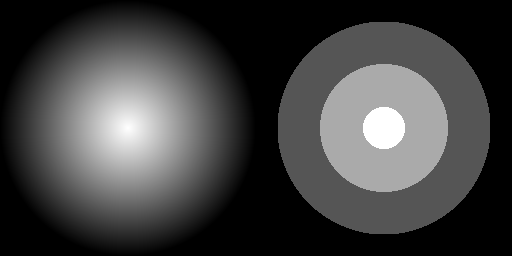

We can of course change the number of levels used during quantization, but of course this comes at the cost of having to have more bits per pixel. For example, here is the same example when quantizing pixels to the nearest possible luminosity using 2 bits per pixel:

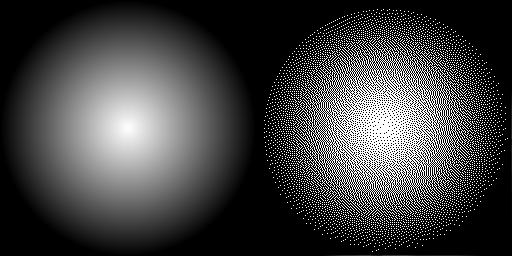

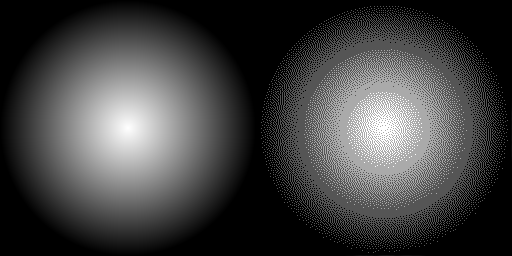

But if trying to match the source image as closely as possible is our goal, we can do better. Imagine each pixel before and after quantization; we can calculate the quantization error by subtracting the quantized value from the original value. In the first example, an input value of 0 maps to 0, so the error is 0. Similarly 1 maps to 1 so the error is also 0. A value of 64 however maps to 0, so our error is 64 in this case. While we don’t have enough bits to do anything about this for the current pixel, we can use the neighbouring pixels to distribute this error over a wider area. This is how Floyd-Steinberg (and other) dithering algorithms work.

To see the effect, here is the first example above using Floyd-Steinberg dithering:

Each pixel is still only one bit (black or white), however the quantization error for each pixel has been distributed across the image resulting in an image that more closely matches the original. Of course we can increase the available bits during quantization, so again here’s an example using 2 bits per pixel:

Feel free to play with the demo; you can also load other images via a URL to see how it works on other examples.

Performance

As with the previous example, there is not a huge difference between the performance of the WebAssembly module and the plain JavaScript version. However, there does seem to be a slight performance gain using WebAssembly this time, especially in Firefox in Linux.

This could be due to the slightly increased complexity of the algorithm compared to the Mandelbrot set renderer. In the latter, each pixel is calculated independently and in series and only written, which is very easy to optimise. In this example however, the algorithm requires a read and write from the current pixel and four reads and writes to neighbouring pixels for every pixel in the image.

As the algorithm reads and writes to neighbouring pixels, care must be taken to ensure that out-of-bounds array accesses cannot occur. Interestingly, if the algorithm is modified so that this safety is not enforced, the plain JavaScript version is slowed significantly. This indicates that the browser JIT compiler is either capable of optimising the bounds checks out completely when it detects that the code is written correctly, or that bounds checking causes the observed overhead only when an out-of-bound read/write occurs.

Conclusion This example proves yet again how well modern browsers are able to optimise JavaScript code. It also proves that even though WebAssembly support is extremely new (as of writing), performance in both Chrome and Firefox is on-par with the browser’s best optimisations to JavaScript code for these simple algorithms, which is excellent.

I can only imagine that WebAssembly will continue to be optimised, and it will be very interesting to throw threading into the mix as soon as it is supported.